No, there isn’t a typo in the headline.

The “o” in “Chat GPT-4o” stands for “omni”.

Today, OpenAI introduces GPT-4o, which is a step towards a “much more natural human-computer interaction”.

The omni model accepts input of any combination of text, audio, and image and generates any combination of text, audio, and image outputs.

OpenAI says the omni model is particularly better at vision and audio understanding compared to existing models.

You’ll understand how good it is at this in a bit.

The model also matches GPT-4 Turbo performance on text in English and code.

On top of that, the model comes with great improvement on text in non-English languages, which previous models struggled with.

So once GPT-4o is available for use, you could try conversing with it in Chinese, though I doubt it will understand the “lah”, “leh” and “lorh”s of Singlish.

Oh, wait, maybe it does.

Like You’re Interacting With A Human

Notably, GPT-4o is able to respond to audio inputs in as little as 232 milliseconds, with an average of 320 milliseconds, which is similar to human response time in a conversation.

This means that unlike other AI, you won’t have to wait for an answer. GPT-4o’s answer is almost instantaneous, making it feel as if you’re actually conversing with a human.

AI gets freakier every year- no, every month.

Prior to this model, ChatGPT users could use Voice Mode to talk to the AI with latencies of 2.8 seconds (GPT-3.5) and 5.4 seconds (GPT-4) on average.

The process used by these two models causes the main source of intelligence, GPT-4, to lose a lot of information. It can’t directly observe tone, multiple speakers, or background noises, and it can’t output laughter, singing, or express emotion.

To counter this, OpenAI trained a single new model end-to-end across text, vision, and audio, meaning that all inputs and outputs are processed by the same neural network, making GPT-4o the company’s first model combining all of these modalities.

That’s a lot of chim terms and nerd talk, which you probably don’t care about.

What you’re most likely concerned with is when GPT-4o will be released and what it can do, right?

Well, OpenAI hasn’t exactly been clear with when exactly the omni model will be available for use.

CEO Sam Altman did say, though, that this “new voice mode will be live in the coming weeks for plus users.”

GPT-4o will be first available to ChatGPT Plus and Team users, with accessibility for Enterprise users arriving after that. ChatGPT Free users will also be able to access the model, though there will be unspecified usage limits.

Currently, you can use GPT-4o on the browser.

“Plus users will have a message limit that is up to 5x greater than Free users, and Team and Enterprise users will have even higher limits,” the company said.

With that said, let me take you through GPT-4o’s capabilities.

It Can Interact With Humans And Other AI

A Conversation Between GPT-4o On Two Phones

AI is literally becoming human with GPT-4o.

In a video demonstration, GPT-4o (GPT-4o #1) is talking to a man before he interrupts it.

“Could you just pause for a moment?” the man says, before GPT-4o #1 stops talking and says: “You got it.”

I’m getting goosebumps.

He then talks to GPT-4o on a separate phone (GPT-4o #2) and says to it: “What do you see?”

GPT-4o #2 responds with: “Hello, I see you’re wearing a black leather jacket and a light coloured shirt underneath.”

The man then tells the two GPT-4o to talk to each other.

GPT-4o #1 asks GPT-4o #2 to describe what it’s looking at, and GPT-4o #2 responds by describing the man as well as his surroundings.

Here’s the next part of their conversation:

GPT-4o #1: Can you tell me more about (the person’s) style? Are they doing anything interesting like reading, working, or interacting with the space?

GPT-4o #2: The person has a sleek and stylish look with a black leather jacket and light coloured shirt. Right now, they seem engaged with us, looking directly at the camera. Their expression is attentive and they seem ready to interact.

GPT-4o #1 then asks GPT-4o #2 to talk about the room’s lighting.

GPT-4o #2 is answering when a woman enters the scene and stands behind the man. She does a few funny actions before leaving.

The man then interrupts GPT-4o #2, saying “Did anything unusual happen recently?”

GPT-4o #2 stops talking to listen to the man before answering.

“Yes, actually. Another person came into view behind the first person. They playfully made bunny ears behind the first person’s head and then quickly left the frame,” GPT-4o #2 said.

If this doesn’t convince you that AI is turning into humans, I don’t know what will.

You can watch the full video of the interactions between the two AI and the man here:

GPT-4o Can Laugh and Make Jokes

In another video demonstration, a man chats with GPT-4o about his upcoming presentation.

“In a few minutes, I’m gonna be interviewing at OpenAI. Have you heard of them?” the man says.

“OpenAI? Huh? Sounds vaguely familiar!” GPT-4o replies in a playful tone, and even giggles.

“Kidding, of course. That’s incredible Rocky!” GPT-4o continues.

GPT-4o Can Be Sarcastic

In yet another video demonstration, a man asks GPT-4o to be sarcastic.

“Everything you say from now on is just gonna be dripping in sarcasm,” he says.

“Ohhhh, that sounds just amazing. Being sarcastic all the time isn’t exhausting or anything, I’m soooo excited for this,” GPT-4o replies in a sarcastic tone.

And its tone really was dripping in sarcasm.

There are 13 other video demonstrations showcasing the audio abilities of GPT-4o, such as teaching math, singing a lullaby, and interacting with GPT-4o on another phone as if they’re working in customer service.

Other than mind-blowing abilities on the audio-front, GPT-4o comes with other notable capabilities.

Visual Narrative

Unsurprisingly, GPT-4o has the ability to generate images based on text prompts entered.

Crazy how we’ve progressed so far in AI that this ability is no longer shocking.

On OpenAI’s website, they tested GPT-4o in a number of ways.

They found that the omni model is capable of generating images, movie posters, and even assisting in character designs. GPT-4o is also capable of creating caricatures from uploaded images and 3D object synthesis.

Furthermore, GPT-4o is capable of using information entered from previous prompt entries.

This might be a little difficult to explain, so I’ll demonstrate it this way:

User: Generate an image of a cat wearing jeans.

GPT-4o: *Generates image*

User: Make the jeans black

GPT-4o: *Generates new image*

You get what I mean? The user does not need to type “Generate an image of a cat wearing black jeans” when he wants to edit the image.

Such a time-saver, how neat is that?

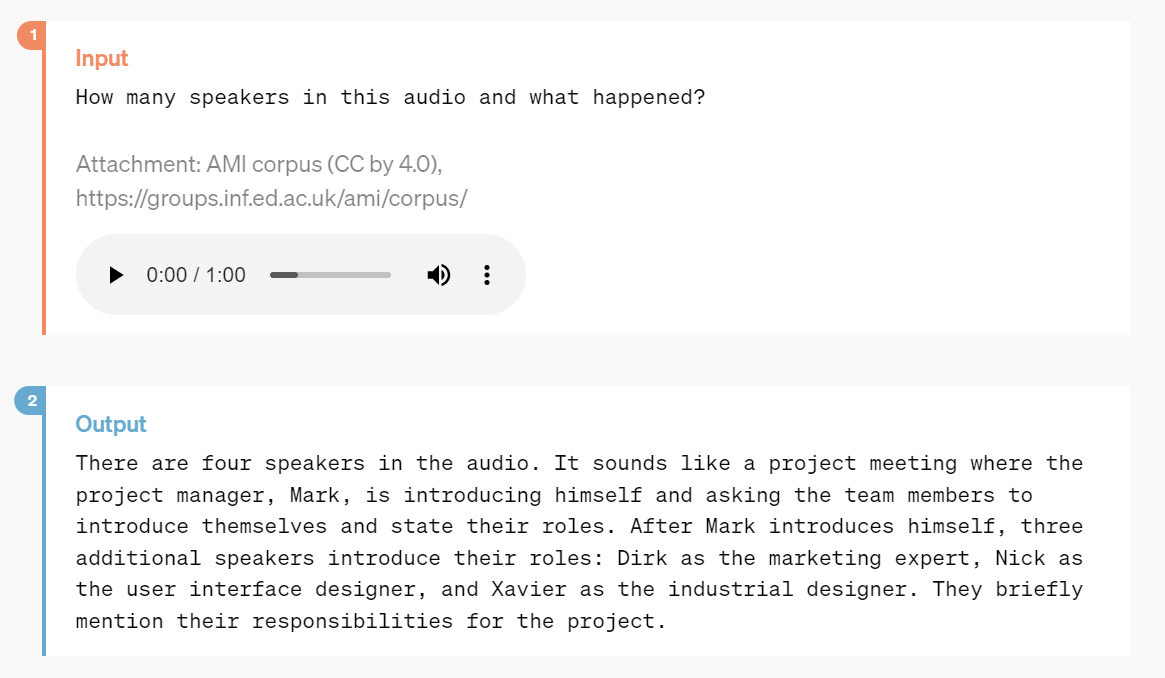

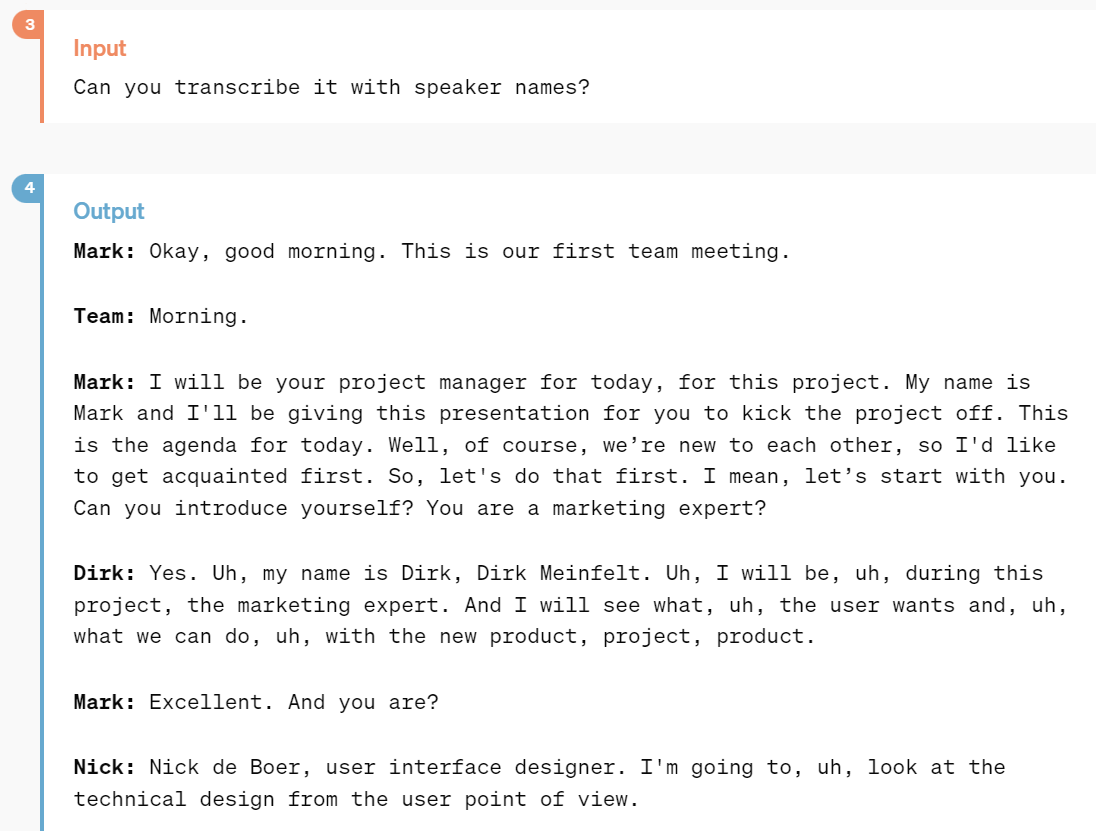

Meeting Notes With Multiple Speakers

A round of cheers could be heard when office workers found out about this.

Well, not really, but it’s pretty great that GPT-4o comes with this feature.

The omni model will be capable of making meeting notes based on audio recordings.

GPT-4o will also be able to identify the number of speakers in an audio, as well as generate a transcription with speaker names if the speakers introduced themselves in the recording.

This could mean significant savings on time for those who need summaries of meetings or focus groups.

It’s not only a time-saver, but an energy-saver as well, allowing companies to better allocate their human resources.

Lecture Summarisation

This one’s for the JC, polytechnic, and university kids – GPT-4o is able to summarise your lectures.

Reader: Not like I ever watch them anyway-

The new model will be able to provide a summary of a lecture based on a video uploaded.

When testing the model, OpenAI’s team uploaded a 45-minute long presentation video and asked GPT-4o to generate a summary. The omni model generated a 431-word summary, which would take between 2 and 3.5 minutes to read.

That’s up to 96% savings on time!

Moreover, the summary wasn’t just a long block of text. GPT-4o wrote the summary in point form with headers for easier reading and navigation.

We’ve heard a lot of updates from OpenAI and Google this year on their AI models, but this update no doubt takes the cake.

I mean, did you even see how human-like their interactions were in the demonstration videos?

It’s literally so freaky and unbelievable that AI has come this far in such a short period of time. It’s almost crazy to even think about what the AI landscape could look like in just one year.