Google’s Gemini AI Video Demo Sparks Controversy as Authenticity Comes into Question

It has come to light that Google’s impressive Gemini AI demo is not as it initially appeared.

On 6 December, Google unveiled Gemini, its most powerful AI model, entering the competition alongside renowned chatbots like OpenAI’s ChatGPT.

Gemini boasts multimodal capabilities, enabling it to generate and comprehend an unprecedented range of data types, including text, audio, video, images and even code. Even more striking, Gemini was shown solving mathematical reasoning problems, dissecting complex scientific data, and handling advanced coding tasks.

To promote the launch, Google released a series of video demonstrations highlighting Gemini’s capabilities. If you’ve watched the initial hands-on video, you may have been so impressed that you thought AI was about to take over the world.

However, the video, which garnered two million views in just two days, has since been mired in controversy. It turns out that the demonstration was not entirely real.

Google Has Admitted That the Video Was Edited

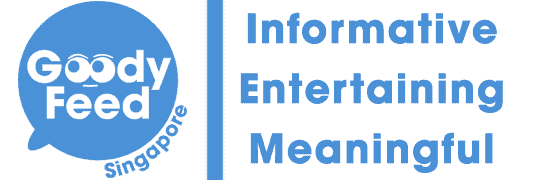

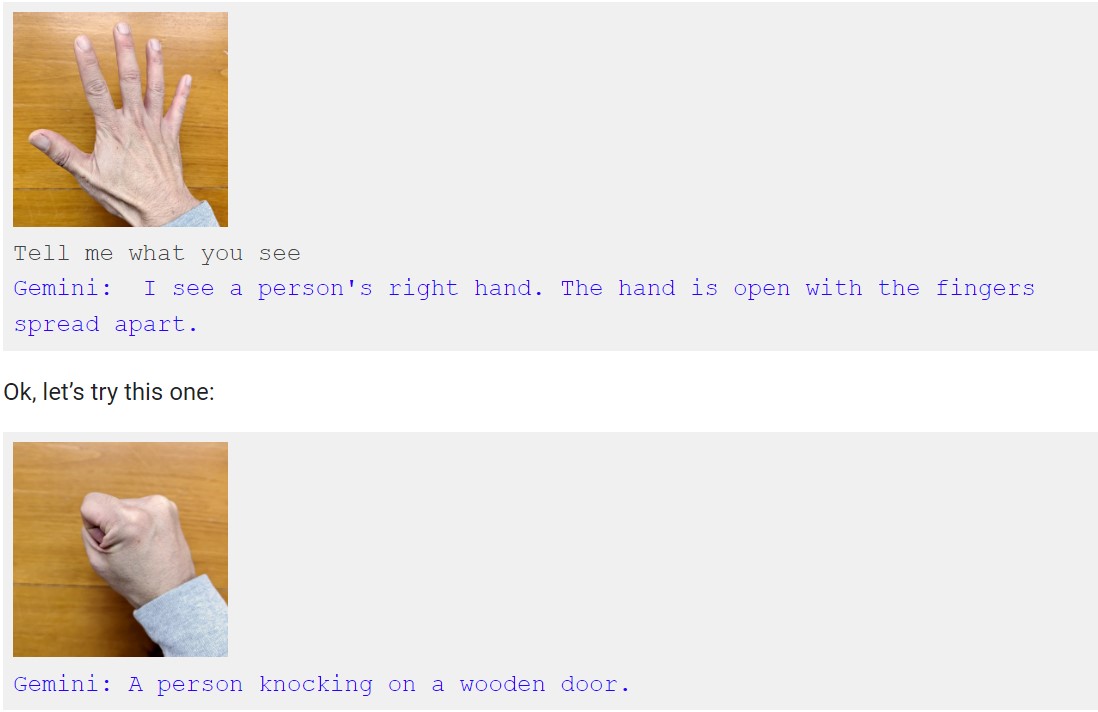

The video portrays a user engaging in a seamless interaction with Gemini. It begins with a multimodal dialogue in which the user places a sheet of paper on a table and draws a duck, with Gemini analysing the drawing at each stage until the final product is revealed.

The user then inquires about how to say duck in another language, showcasing Gemini’s multilingual abilities.

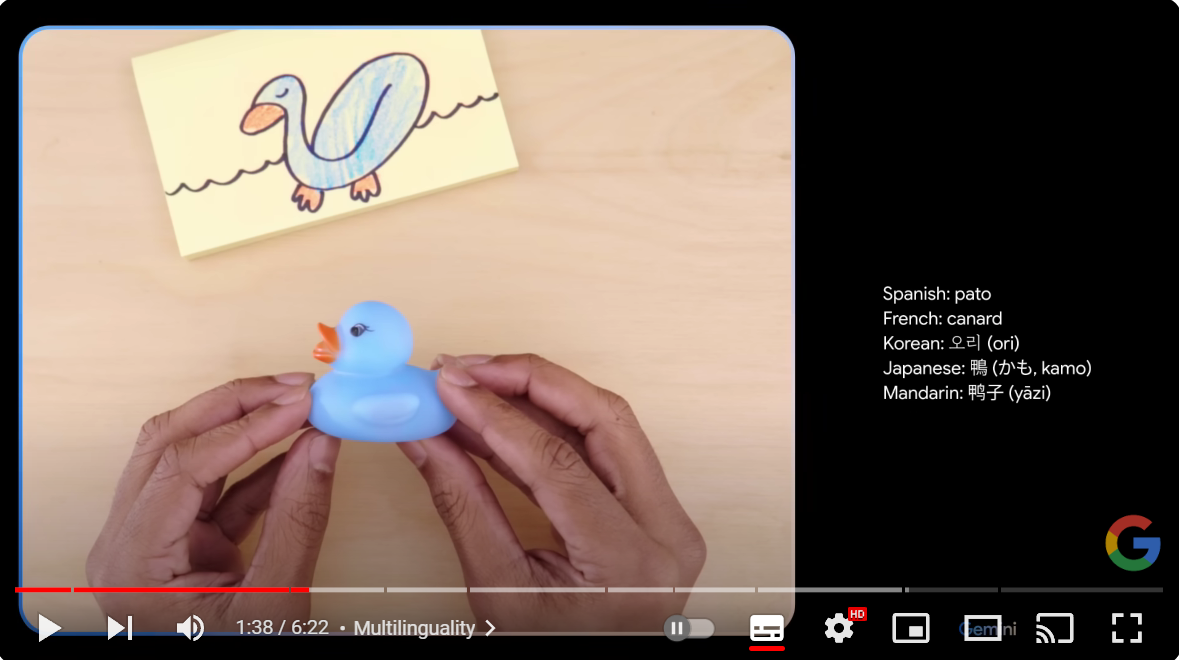

The user even tests Gemini with visual puzzles by placing a paper ball under one of three cups, and Gemini accurately guesses the location.

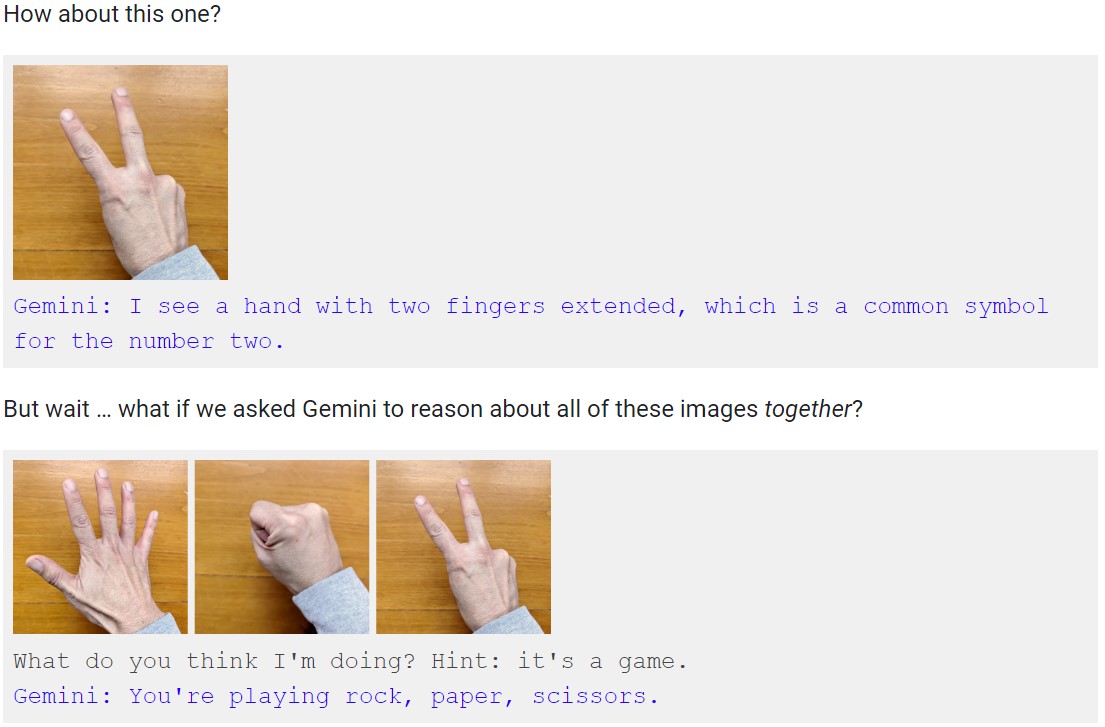

The user also mimes a game of rock, paper, scissors and, of course, Gemini promptly identifies the game.

While Gemini is undoubtedly a revolutionary AI model, the interactions depicted in the video differ significantly from what users actually experience. The video appears to show Gemini responding to a range of multimodal challenges in real time, which is not how the footage was produced.

In the video description, Google includes a disclaimer: “For the purposes of this demo, latency has been reduced and Gemini outputs have been shortened for brevity.” In simpler terms, each response from Gemini took longer, and ran longer, than what was shown.

So, how exactly was it edited?

Behind the Scenes of the Video

According to Bloomberg Opinion, a Google spokesperson revealed that the video was created by using still image frames from the footage and written prompts for Gemini to respond to.

In other words, the responses heard in the video were generated from written prompts submitted to Gemini along with still images.

This approach is a far cry from what was implied in the demonstration.

Google DeepMind’s Vice President of Research and deep learning lead, who also co-leads Gemini, told The Verge that all the user prompts and results in the video were real and had merely been shortened for brevity.

He added that the video was intended to inspire developers and showcase what multimodal user experiences could look like.

Despite the controversy, there is a silver lining: We still have some time to enjoy a semblance of normalcy before AI potentially reshapes the world, our jobs, and our livelihoods.