Imagine having a friend you haven’t met in a while jio you out for dinner.

You’re having a good time catching up until they pull out an iPad, and then it dawns on you.

Alas, having an old friend or acquaintance try to sell you insurance and investment opportunities is a feeling we know all too well.

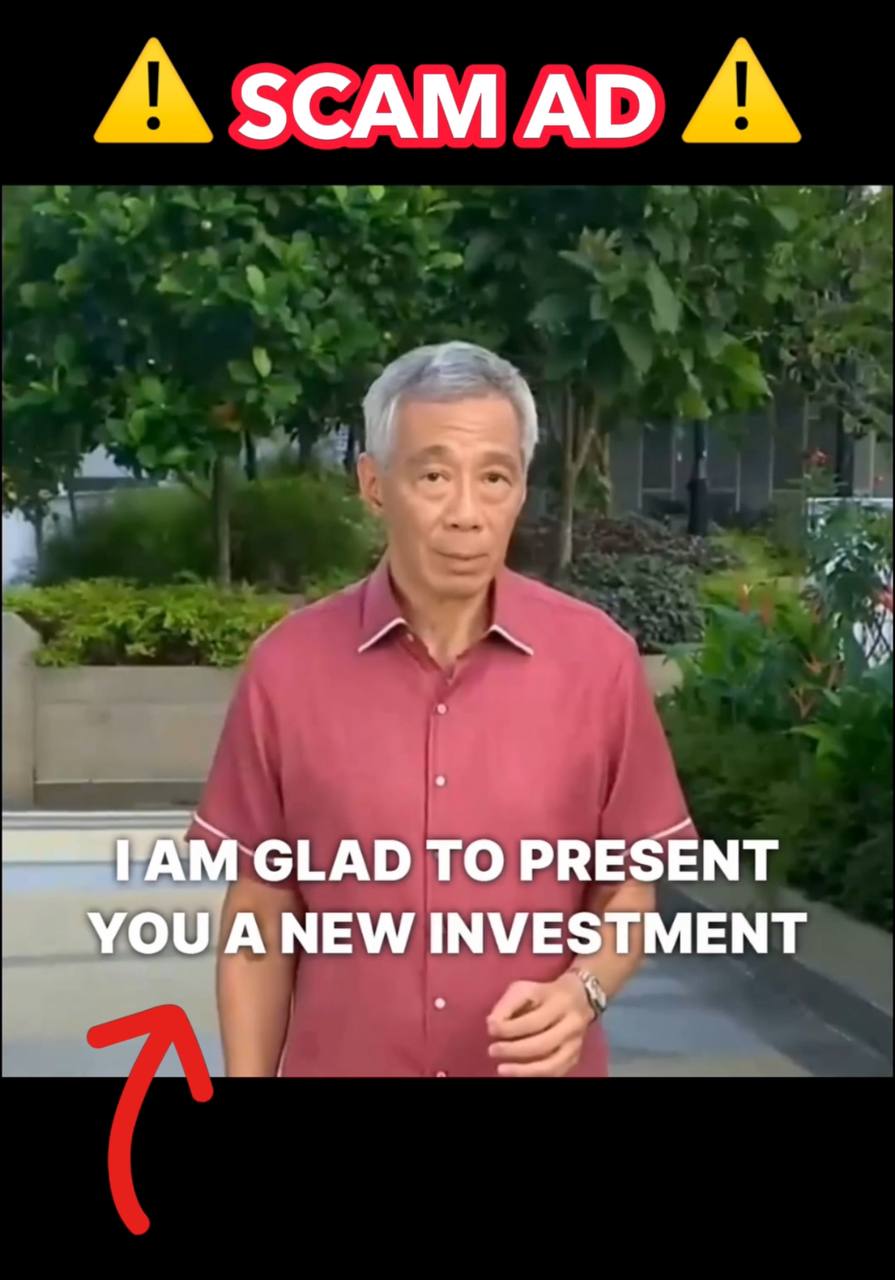

However, the last person we’d expect this treatment from is Senior Minister Lee Hsien Loong, who was seen promoting investment products in a deepfake scam.

AI and Deepfake Technology are Becoming Better by the Day

AI will be a part of our future whether we like it or not.

And while this might not mean AI is taking over the world and holding humans hostage just yet, we still need to remain extremely vigilant, as deepfake scams are on the rise.

So what exactly are deepfakes and how do they work?

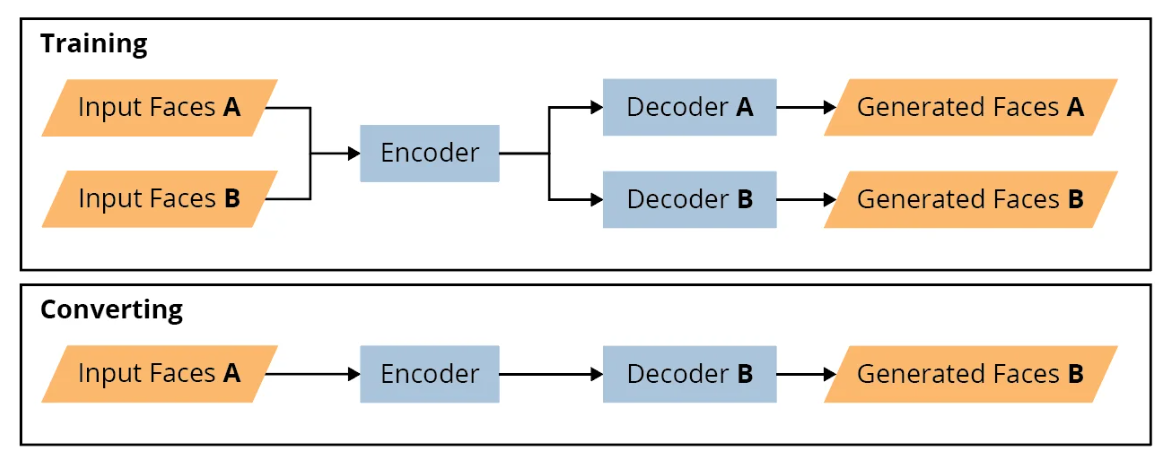

The term is a blend of “deep learning” and “fake”, and generally refers to a photograph, video or audio clip that has been manipulated using machine learning or artificial intelligence.

Deepfakes tend to look realistic because they are not simply edited with conventional video editing software.

Instead, specialised applications analyse every minute detail of a person’s face and learn how to manipulate those details to generate an entirely new video, all while preserving the look and feel of the original footage.


These synthetic videos can be used for good or bad, but it’s not a stretch to see how such fabrications can be extremely dangerous.

SM Lee’s Deepfake Came from His 2023 National Day Message

In a Facebook post on 2 June 2024, SM Lee drew attention to a deepfake video making the rounds online.

This fabricated video showed SM Lee “asking viewers to sign up for an investment product that claims to have guaranteed returns.”

In this particular incident, SM Lee noted that the scammers had mimicked his voice and layered this fake audio over footage of him delivering his 2023 National Day message.

To make it more convincing, they even synchronised his mouth movements with the audio, which SM Lee described as “extremely worrying” since “people watching the video may be fooled into thinking I really said those words.”

He also urged the public to be careful, stating that “if something sounds too good to be true, do proceed with caution.”

The Senior Minister added that if the public sees scam ads of him or any other Singapore public office holder promoting such investment products, they should not believe them.

He rounded off the post by urging people to report such scams via the government’s ScamShield Bot on WhatsApp.

Not the First Prominent Figure to Be Targeted by Deepfake Scams

With the prevalence of AI usage today, it should come as no surprise that SM Lee is not the first victim of such deepfake scams.

This is not even the first time SM Lee himself has been subjected to these modified videos.

Just a few months earlier, on 29 December 2023, SM Lee shared another post warning that several deepfake videos with fake audio of him promoting crypto scams were circulating.

Prime Minister Lawrence Wong, who was Deputy Prime Minister at the time, was also targeted.

Beyond our shores, other prominent figures such as Elon Musk, Joe Biden and Chinese President Xi Jinping have fallen victim to a wide variety of AI deepfakes.

Even Taylor Swift has been deepfaked into promoting a Le Creuset cookware set.

Less innocent scammers have also made pornographic deepfakes of her.

This deepfake era is not one we want from the Eras Tour.

How to Stay Safe from Deepfake Scams

According to the Sumsub Identity Fraud Report 2023, there was a ten-fold increase in deepfakes detected globally across all industries from 2022 to 2023.

While this is extremely daunting, creating a deepfake convincing enough to pass as real is not easy, according to a blog post from Carnegie Mellon University.

Researchers Catherine Bernaciak and Dominic A. Ross shared that while a default gaming-type graphics processing unit (GPU) and open-source software are readily available, “the significant graphics-editing and audio-dubbing skills needed to create a believable deepfake are not common.”

A lot of time is also needed to train the AI model and to fix imperfections.

However, this does not mean we can let our guard down, as AI software and technology continue to advance rapidly.

Antivirus and security software company Norton lists many ways to spot a deepfake, including:

- Unnatural eye movement – a lack of eye movement, such as not blinking

- Unnatural facial expressions – if something looks off, it might be because of facial morphing

- A lack of emotion – the person’s facial expressions don’t match the emotions of what they are saying

- Unnatural colouring – abnormal skin tones and weird lighting are also signs of a deepfake

- Hair and teeth that don’t look real – isolated characteristics like frizzy hair or outlines of individual teeth are not easily replicated by these algorithms

For more ways to spot deepfakes, check out our article on 5 ways to spot if a video is real or a deepfake.

Of course, this list is non-exhaustive.

More importantly, we need to be aware of these deepfake scams and remain vigilant.

As a wise man once said, if it’s too good to be true, it probably is.