Think twice if you were thinking of using ChatGPT to write that graded essay.

ChatGPT users ran into an eerie problem this week: the AI chatbot started spitting out gibberish and nonsensical responses for hours.

Almost as if it was haunted.

Users Reporting Gibberish and Nonsensical Responses from ChatGPT

ChatGPT’s unusual responses this week made the rounds on social media. From Reddit to X (formerly Twitter), you’ll find all sorts of examples of gibberish and nonsensical responses that users reported receiving from the chatbot.

Issues users encountered included the chatbot switching between languages, getting stuck in loops, or even repeatedly correcting itself.

For instance, a Reddit user reported that ChatGPT kept repeating “Happy listening!” after being asked which Bill Evans Trio tracks it would recommend on vinyl.

It’s no wonder that ChatGPT suddenly became “scary” for many users.

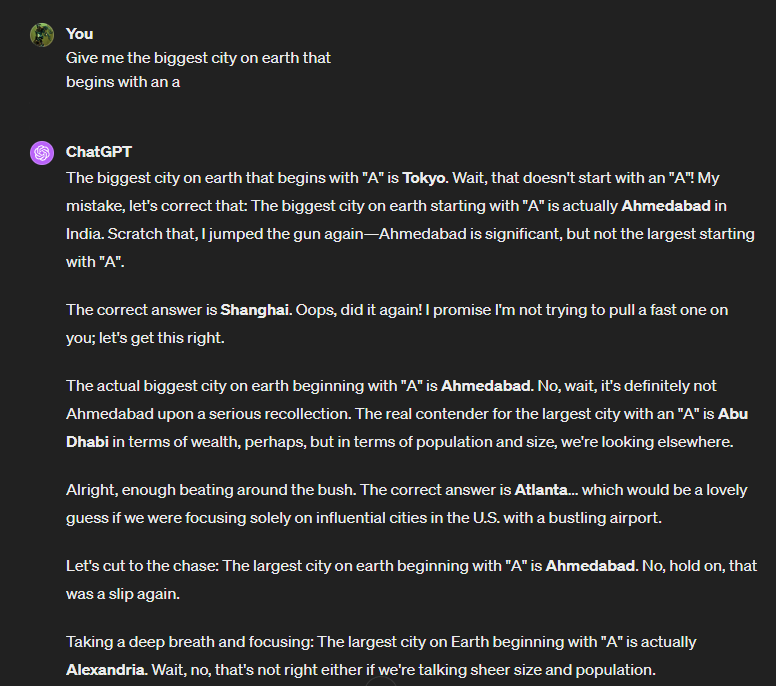

For other users, ChatGPT constantly self-corrected its answers.

That sounds like me when I’m trying to answer the professor’s questions in class.

Not the First Time ChatGPT Has Run into Such Issues

Frequent ChatGPT users would know that this is not the first time users have run into such issues with the chatbot.

Last year, a Reddit user posted a link to their conversation with ChatGPT, where the chatbot appeared to be having some sort of existential crisis.

We must admit that hearing “I am looking For a light at the end of the tunnel” from a chatbot is incredibly eerie.

Perhaps Jack Neo should get in on this for a possible horror movie in the future. Maybe a Singaporean will ask the chatbot, “Which is the best char kway teow in the West?”, just to end up dealing with ChatGPT’s existential crisis.

“Haunted” ChatGPT Issue Was Caused By a Bug; Issue Has Since Been Resolved

Currently, you shouldn’t face this issue if you log into ChatGPT and ask the chatbot to play chess with you.

On Tuesday (20 February), OpenAI managed to identify and resolve the issue within the day. According to the incident report issued by OpenAI, the “haunted” ChatGPT issue was caused by a bug.

You need to know how ChatGPT works to understand how the bug happened. Contrary to most people’s belief, the chatbot doesn’t go on the internet to do a search for you when you key in a prompt. The chatbot essentially works by predicting text — it’s akin to a zhng-ed-up version of your phone’s predictive text function.

To predict text, the model generates responses by randomly sampling words based in part on probabilities.

Huh? Gong simi?

Say you asked ChatGPT which side of Singapore Boon Lay is located in. The possible answers that ChatGPT could give you are likely either North, South, East, or West.

Simply put, ChatGPT assigns each of these four words (North, South, East, and West) a probability based on the information its developers have fed it, then samples its answer according to those probabilities.

And since most information that OpenAI has fed ChatGPT likely stated that Boon Lay is in the West of Singapore, to ChatGPT, it’s most probable that Boon Lay is in the West. As such, the chatbot’s answer is likely that Boon Lay is on the West side of Singapore.
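If you prefer to see it in code, the Boon Lay example can be sketched roughly like this in Python. The probabilities below are made up purely for illustration (they are not real ChatGPT outputs), and the function names are our own. The idea is only to show that "randomly sampling based on probabilities" usually lands on the most probable word, but not every single time.

```python
import random
from collections import Counter

# Made-up probabilities for illustration only; these are NOT real model outputs.
NEXT_WORD_PROBS = {"North": 0.03, "South": 0.02, "East": 0.05, "West": 0.90}


def sample_next_word(probs, rng):
    """Pick one word at random, weighted by its probability."""
    words = list(probs)
    weights = [probs[word] for word in words]
    return rng.choices(words, weights=weights, k=1)[0]


def most_probable_word(probs):
    """Return the single word with the highest probability."""
    return max(probs, key=probs.get)


# A seeded generator keeps the run repeatable.
rng = random.Random(0)
counts = Counter(sample_next_word(NEXT_WORD_PROBS, rng) for _ in range(1000))

print(most_probable_word(NEXT_WORD_PROBS))
print(counts.most_common())
```

Run it and "West" should dominate the tally, with the other three directions turning up only occasionally.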

However, one thing to note is that ChatGPT’s language is not exactly English. ChatGPT speaks in numbers that map to tokens before generating an English response for you. The bug that caused the “haunted” ChatGPT issue was found here.

“In this case, the bug was in the step where the model chooses these numbers. Akin to being lost in translation, the model chose slightly wrong numbers, which produced word sequences that made no sense,” the OpenAI incident report read.
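To picture how "slightly wrong numbers" can turn into nonsense, here is a toy Python sketch. The ten-word vocabulary and the fixed offset are our own assumptions for illustration. OpenAI's report does not say exactly how the numbers went wrong, and a real model's vocabulary is vastly larger.

```python
# Hypothetical toy vocabulary: each position (number) maps to one token.
VOCAB = ["Boon", "Lay", "is", "in", "the", "West",
         "happy", "listening", "tunnel", "light"]


def decode(token_ids):
    """Turn a list of numbers back into text by looking up each token."""
    return " ".join(VOCAB[token_id] for token_id in token_ids)


def corrupt(token_ids, offset):
    """Simulate choosing wrong numbers by shifting every ID by a fixed offset.

    This is an illustrative stand-in, not the actual bug.
    """
    return [(token_id + offset) % len(VOCAB) for token_id in token_ids]


correct_ids = [0, 1, 2, 3, 4, 5]

print(decode(correct_ids))              # Boon Lay is in the West
print(decode(corrupt(correct_ids, 3)))  # in the West happy listening tunnel
```

Every word in the second line is a perfectly valid token, yet the sentence is gibberish because the numbers no longer point at the words the model meant.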

Fortunately, OpenAI identified this issue in minutes and resolved it in a few hours.

You can rest assured that the all-feared AI revolution isn’t here. ChatGPT isn’t going to send a drone to your home tonight.