Ever since Facebook—ahem, Meta—revamped the company’s vision and mission to that of the Metaverse, that’s all we have ever heard about.

The virtual domain is thought to be a novel innovation for the workplace of the future that fosters interconnectedness never before witnessed.

Besides drifting through virtual spaces as an avatar, or possibly having an economic marketplace within the realm, Meta’s CEO Mark Zuckerberg also announced that the company will be working on developing an AI-powered universal speech translator for languages in the Metaverse.

Yes, Star Trek might want a royalty from him.

Making Hokkien Understood by Masses

True to his word, Meta introduced to the world its upcoming release of the first AI-powered speech translation system for the unwritten language, Hokkien.

The translation system will mark the start of Meta AI’s Universal Speech Translator (UST) project, which aims to create AI systems that offer real-time speech-to-speech translation in all languages, even those that are primarily spoken and have no written system.

The accomplishment is all the more impressive when you realize that Hokkien lacks a widely used standard writing system (we write in Mandarin instead) and is also an under-resourced language, which means there isn’t as much paired speech data available in comparison with, say, Spanish or English.

Moreover, gathering and annotating data to train the model proved challenging due to the lack of human English-to-Hokkien translators.

According to Meta researcher Juan Pino, “Our team first translated English or Hokkien speech to Mandarin text, and then translated it to Hokkien or English.” To guarantee that the translation was accurate, the team also collaborated extensively with Hokkien speakers.

The paired sentences were then included in the data needed to train the AI model.

To enable others to expand on the findings, the researchers will make their model, code, and training data publicly available.

How it Works

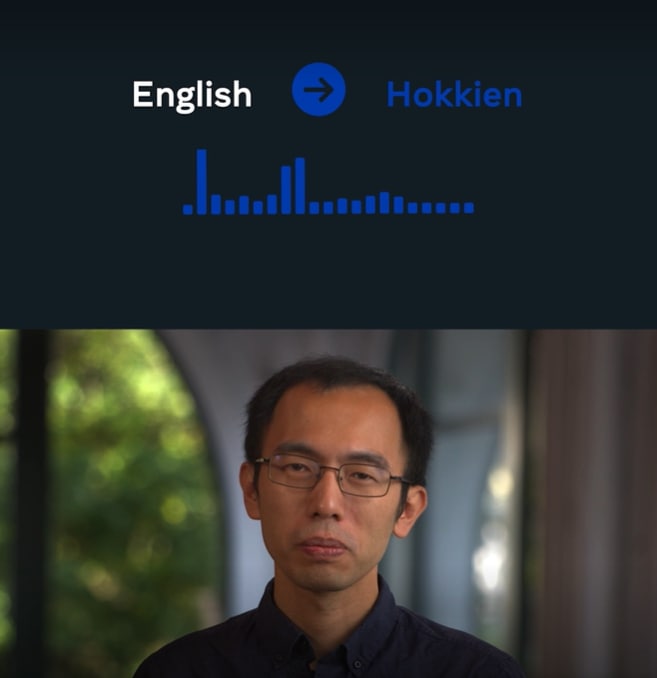

The project was spearheaded by Peng-Jen Chen, whose father speaks primarily Taiwanese Hokkien. Even though his father speaks Mandarin well, he struggles to communicate fluently about complex topics. For him, this will be a long-sought dream turned into reality.

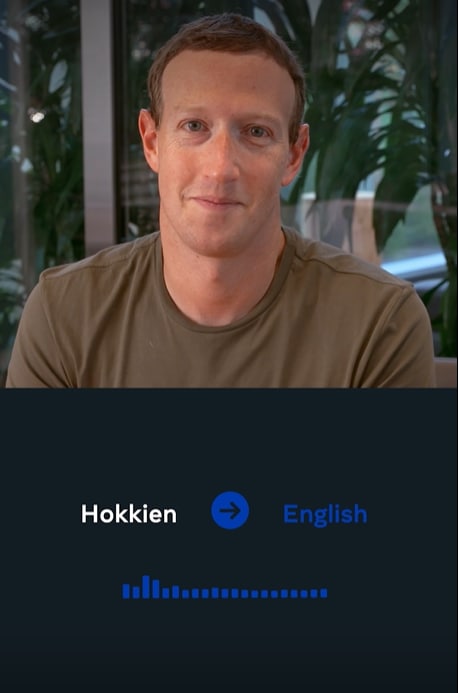

In a demonstration video released to the press, Chen and Zuckerberg showed the efficiency of the translation system.

When Chen spoke in Hokkien, the system swiftly recognized what he said and translated the message to English, which Zuckerberg was able to understand and respond to in English.

The system then picked up his speech and translated it back to Hokkien for Chen.

Now I admit that I cannot speak Hokkien, but with my limited Hokkien listening comprehension ability, I agree that this system is the real deal.

Diving into the technicalities behind the magic, the team used speech-to-unit translation (S2UT) to convert input speech to a sequence of acoustic units, following the path previously pioneered by Meta.

Similarly, UnitY was utilized for a two-pass decoding mechanism in which the first-pass decoder generates text in a related language (Mandarin) and the second-pass decoder generates units.

The Hokkien translations are assessed using a metric known as ASR-BLEU, which entails first using automatic speech recognition (ASR) to convert the translated speech into text and then computing BLEU scores by comparing the transcribed text with a human-translated text.

I know you don’t understand what that all means: just know that it’s very smart.
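For the curious, here is a minimal sketch of the ASR-BLEU idea in Python. It is only an illustration and not Meta's evaluation code: the `transcribe` function is a hypothetical stand-in that looks up made-up transcripts instead of running a real speech recognizer, and `bleu` is a bare-bones, single-reference, unsmoothed BLEU written from the standard definition.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Return a Counter of all n-grams (as tuples) in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def bleu(hypothesis, reference, max_n=4):
    """Single-reference, sentence-level BLEU with no smoothing."""
    if not hypothesis:
        return 0.0
    log_precisions = []
    for n in range(1, max_n + 1):
        hyp_counts = ngrams(hypothesis, n)
        ref_counts = ngrams(reference, n)
        # Clip each hypothesis n-gram count by its count in the reference.
        matches = sum(min(c, ref_counts[g]) for g, c in hyp_counts.items())
        total = sum(hyp_counts.values())
        if matches == 0 or total == 0:
            return 0.0
        log_precisions.append(math.log(matches / total))
    # The brevity penalty punishes hypotheses shorter than the reference.
    if len(hypothesis) >= len(reference):
        brevity_penalty = 1.0
    else:
        brevity_penalty = math.exp(1 - len(reference) / len(hypothesis))
    return brevity_penalty * math.exp(sum(log_precisions) / max_n)


def transcribe(audio_id):
    """Hypothetical ASR stand-in: maps a fake audio ID to a made-up transcript."""
    fake_asr_output = {
        "clip_exact": "how are you doing today my friend",
        "clip_close": "how are you doing today friend",
    }
    return fake_asr_output[audio_id]


def asr_bleu(audio_id, reference_text):
    """ASR-BLEU: transcribe the translated speech, then score it against the reference."""
    return bleu(transcribe(audio_id).split(), reference_text.split())


reference = "how are you doing today my friend"
exact_score = asr_bleu("clip_exact", reference)
close_score = asr_bleu("clip_close", reference)
print(f"exact: {exact_score:.3f}, close: {close_score:.3f}")
```

A transcript that matches the human translation word for word gets a perfect score, while one that drops a word scores lower. Keep in mind that any mistakes made by the speech recognizer also drag the score down, so ASR-BLEU measures the translator and the recognizer together.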

As mentioned earlier, Hokkien being an unwritten language proved to be a challenge. The team then created a system that converts Hokkien speech into the standard phonetic notation called Tâi-lô in order to enable automatic evaluation.

With that, they can compute a BLEU score at the syllable level and compare the translational accuracy of various methods with ease.
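To see why scoring at the syllable level helps, here is a small Python sketch. It assumes the common Tâi-lô convention that spaces separate words and hyphens join the syllables within a word; the helper names and the example phrases are purely illustrative and do not come from Meta's tooling. It computes clipped unigram precision, which is the basic building block of BLEU, once over whole words and once over syllables.

```python
from collections import Counter


def to_syllables(text):
    """Split romanized text into syllables: spaces separate words, hyphens separate syllables."""
    return [s for word in text.split() for s in word.split("-") if s]


def clipped_precision(hypothesis, reference, n=1):
    """Fraction of hypothesis n-grams that also appear in the reference (counts clipped)."""
    hyp = Counter(tuple(hypothesis[i:i + n]) for i in range(len(hypothesis) - n + 1))
    ref = Counter(tuple(reference[i:i + n]) for i in range(len(reference) - n + 1))
    total = sum(hyp.values())
    if total == 0:
        return 0.0
    matched = sum(min(count, ref[gram]) for gram, count in hyp.items())
    return matched / total


# Illustrative phrases only: the hypothesis gets the last syllable wrong.
reference = "guá ài Tâi-uân"
hypothesis = "guá ài Tâi-lâm"

word_p1 = clipped_precision(hypothesis.split(), reference.split())
syll_p1 = clipped_precision(to_syllables(hypothesis), to_syllables(reference))
print(f"word-level: {word_p1:.2f}, syllable-level: {syll_p1:.2f}")
```

At the word level, the whole last word counts as wrong. At the syllable level, the translation still earns credit for the syllable it got right, which gives a finer-grained comparison between systems.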

Using the AI Translation Beyond the Metaverse

Let’s be honest, how many Millennials and Gen Z can speak Hokkien fluently? And no, profanity doesn’t count.

To be fair, the dialect is not widely used in our workforce, and there is no pressing reason to learn it. However, within the healthcare industry, AI translation for the dialect may be the saving grace in understanding silver citizens who speak only dialect.

And on a smaller scale, grandchildren can foster stronger ties with their dialect-speaking grandparents who, like Chen’s father, sometimes have difficulty expressing themselves in Mandarin.

Hopefully, for us, reunion dinners will see a merrier exchange between the grandchildren and grandparents, rather than a “How are you?”

You can try out the demo here.

Featured Image: Meta